The Microservices Architecture has gained popularity for building complex, scalable software systems.

This approach organizes an application into a collection of loosely coupled, independently deployable services, with each microservice focused on a specific business capability. These services can be developed, deployed, and scaled independently, promoting flexibility and agility.

While microservices offer significant advantages—such as enhanced scalability, flexibility, and faster time to market—they also introduce challenges, particularly in data management.

A core principle of microservices is that each service should own and manage its data. This is often summarized as "don't share databases between services," emphasizing the importance of loose coupling and service autonomy to allow independent evolution.

However, it's essential to differentiate between sharing a data source and sharing data. Although sharing a database across services is discouraged, data sharing between services—through APIs or events—is often necessary and encouraged to ensure effective communication.

In this post, we'll look at data design for microservices, the challenges of sharing data between microservices, and the advantages and disadvantages of specific solutions.

Microservices Data Design:

At its core, a microservice is built as an autonomous entity and should control the data layer it operates on. This means a microservice cannot depend on a data layer owned by another entity. To build autonomous services, each microservice therefore needs its own isolated persistence layer.

This section shows patterns and practices for transforming enterprise applications based on a centralized or shared database into microservices based on decentralized databases.

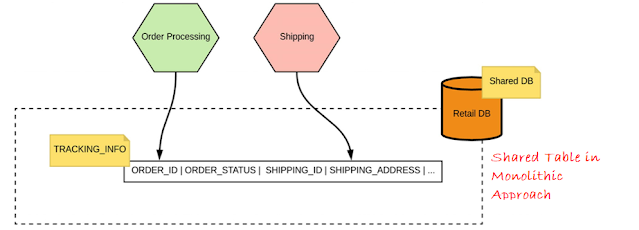

A monolithic application usually has a single centralized database (or a few) shared among multiple applications and services. Take an online retail application as an example: all services of the retail system share a centralized database (Retail DB).

With a centralized database, it is fairly easy to model a complex transactional scenario that involves multiple tables. Most RDBMSs support this out of the box.

Despite these advantages, a centralized database has serious drawbacks that prevent you from building autonomous, independent microservices:

- Single point of failure,

- Potential performance bottleneck due to heavy application traffic directed into a single database,

- Tight dependencies between applications, as they share the same database tables.

Hence, with microservices you need to decentralize data management, and each microservice has to fully own the data that it operates on.

Microservice Arch - A Database per Microservice:

Having a database per microservice gives us a lot of freedom when it comes to microservice autonomy. For instance:

- The database schema can be modified easily, without worrying about external consumers of the database.

- No external application can access the database directly.

- Each microservice is free to select the technology used as its persistence layer.

- Different microservices can use different persistent store technologies, such as an RDBMS, NoSQL, or other cloud services.

However, having a database per service introduces a new set of challenges when it comes to realizing a business scenario:

A). Sharing data between microservices (Soln: Keep Shared Data in Separate DB + MS)

B). Implementing Foreign Key Concepts (Soln: Synchronous Lookups or Asynchronous Events)

C). Sharing Static Data (Soln: Shared Libraries)

D). Data Composition (Soln: Composite Services, Client Side Mash-ups)

E). Transactions - Major Problem! (Soln: 2PC / 3PC Protocols)

Challenge_A). Sharing Data Between Microservices: In the monolithic approach, we used to have shared tables such as Order_Shipping_Association.

Such shared tables are not compatible with microservices. With a microservices architecture, we need to get rid of shared tables and instead access data owned by another microservice through its service interface or API.

Solution Steps:

- Identify the shared table and identify the business capability of data stored in that shared table.

- Move the shared table to a new dedicated database.

- On top of that database, create a new service that implements the business capability identified in the first step.

- Remove all direct database references from the other services and allow them to access the data only via the new service.
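
The steps above can be sketched roughly as follows. This is a minimal in-memory illustration, not a definitive implementation: `OrderShippingService`, `ShippingAssociation`, and the method names are hypothetical, and the dictionary only stands in for the new dedicated database. In a real system the consumer would reach the owning service over the network rather than through a direct object reference.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ShippingAssociation:
    order_id: int
    shipment_id: int


class OrderShippingService:
    """Hypothetical service that is the sole owner of the former shared table."""

    def __init__(self) -> None:
        # Private store standing in for the service's dedicated database.
        self._rows: Dict[int, ShippingAssociation] = {}

    def link(self, order_id: int, shipment_id: int) -> ShippingAssociation:
        row = ShippingAssociation(order_id, shipment_id)
        self._rows[order_id] = row
        return row

    def shipment_for(self, order_id: int) -> Optional[int]:
        row = self._rows.get(order_id)
        return row.shipment_id if row else None


class OrderService:
    """Consumer that reaches the data only through the owner's interface."""

    def __init__(self, shipping: OrderShippingService) -> None:
        self._shipping = shipping

    def order_status(self, order_id: int) -> str:
        # No direct table access: ask the owning service instead.
        shipment_id = self._shipping.shipment_for(order_id)
        return "shipped" if shipment_id is not None else "pending"
```

Because `OrderService` never touches `_rows`, the owning service stays free to change how the association is stored.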

Challenge_B). Foreign Keys: In the monolithic approach, storing data across multiple tables and connecting it through foreign keys (FK) is a very common relational database technique. Take, for example, the order processing and product services, which use the order and product tables. A given order contains multiple products, and the order table refers to those products using a foreign key that points to the primary key of the product table.

Foreign key relations like these are not compatible with the database-per-microservice approach, because the tables now live in separate databases. With a microservices architecture, we need a different solution:
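
To make the monolithic baseline concrete, here is a small sketch using Python's built-in `sqlite3` module. The table and column names (`product`, `order_item`) and the helper `add_item` are assumptions chosen for illustration; the point is only that the database itself rejects a reference to a product that does not exist.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE order_item ("
    " order_id INTEGER NOT NULL,"
    " product_id INTEGER NOT NULL REFERENCES product(id))"
)
conn.execute("INSERT INTO product VALUES (1, 'keyboard')")
conn.commit()


def add_item(order_id: int, product_id: int) -> bool:
    """Insert an order line; the FK constraint rejects unknown products."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO order_item VALUES (?, ?)", (order_id, product_id)
            )
        return True
    except sqlite3.IntegrityError:
        return False
```

Here `add_item(100, 1)` succeeds, while `add_item(100, 99)` is refused by the database because product 99 does not exist.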

- Using Synchronous Lookups

- Using Asynchronous Events

1). Using Synchronous Lookups: A service accesses data owned by another service by calling it synchronously. The technique is easy to understand; at the implementation level, you need to write extra logic to make the external service call. Keep in mind that, unlike in a single database, we no longer have the referential integrity of a foreign key constraint. Service developers therefore have to take care of the consistency of the data they put into their tables. For example, when you create an order, you need to make sure (possibly by calling the product service) that the products referred to by that order actually exist in the product table.
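
A minimal sketch of such a lookup is shown below. All names (`ProductService.exists`, `UnknownProductError`, `create_order`) are hypothetical, and the product service is modeled as an in-process object; in practice the `exists` call would be a remote request (for example over HTTP), with the timeout and retry handling that implies.

```python
from typing import Dict, List


class ProductService:
    """Hypothetical product microservice; it alone owns the product data."""

    def __init__(self) -> None:
        self._products: Dict[int, str] = {1: "keyboard", 2: "mouse"}

    def exists(self, product_id: int) -> bool:
        # Stand-in for a synchronous remote call to the product service.
        return product_id in self._products


class UnknownProductError(Exception):
    """Raised when an order refers to a product the product service lacks."""


class OrderService:
    def __init__(self, products: ProductService) -> None:
        self._products = products
        self._orders: Dict[int, List[int]] = {}

    def create_order(self, order_id: int, product_ids: List[int]) -> None:
        # No foreign key constraint protects us, so check each reference
        # explicitly before storing the order.
        missing = [pid for pid in product_ids if not self._products.exists(pid)]
        if missing:
            raise UnknownProductError(f"unknown product ids: {missing}")
        self._orders[order_id] = list(product_ids)
```

Note that this check is weaker than a real foreign key: a product could be removed right after the lookup, so the order service still has to tolerate stale references.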

2). Using Asynchronous Events

Challenge_C). Shared Data: This is static reference data such as countries and states. There are two approaches:

- Adding another microservice that holds the static data would solve this problem, but it is overkill to have a service just to serve static information that does not change over time.

- Hence, static data is often shared through shared libraries. For example, a service that wants to use the static metadata imports the shared library into its code.
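
A shared library for static data could look roughly like the module below. The module name `reference_data`, the sample entries, and the helper functions are assumptions for illustration only; each service would import the packaged module instead of calling a dedicated service.

```python
# reference_data.py: a hypothetical shared library imported by every service
from types import MappingProxyType
from typing import Tuple

# Read-only mappings, so no importing service can mutate the shared data.
COUNTRIES = MappingProxyType({"IN": "India", "US": "United States"})
STATES = MappingProxyType({
    "IN": ("Karnataka", "Maharashtra"),
    "US": ("California", "Texas"),
})


def country_name(code: str) -> str:
    """Return the display name for a two-letter country code."""
    return COUNTRIES[code.upper()]


def states_of(code: str) -> Tuple[str, ...]:
    """Return the known states for a country, or an empty tuple."""
    return STATES.get(code.upper(), ())
```

The trade-off is that a change to the static data requires releasing a new library version and redeploying the services that import it.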

Challenge_D). Data Composition: Composing data from multiple tables or entities and creating different views is a very common requirement in data management. With a monolithic database (RDBMS), it is very easy to compose multiple tables using joins and SQL statements.

However, in the database-per-microservice approach, building data compositions becomes complex: the data is distributed among multiple databases owned by different services, so we can no longer use built-in constructs such as joins.

Therefore, with a microservices architecture we need a different solution:

- Composite Services, or

- Client Side Mash-Ups

1). Composite Services: To compose data from multiple microservices, you can create a composite service on top of the existing microservices. The composite service is responsible for invoking the downstream services and composing, at runtime, the data retrieved via those service calls.

E.g. to compose the orders placed with the customers who placed them, create a new service, the 'Customer Order Composite Service'. This service calls the order processing and customer microservices and implements both the runtime data composition logic and the communication logic.
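
The composite service described above might be sketched as follows. The class and method names, the record shapes, and the sample data are all hypothetical, and the downstream services are in-process stand-ins for what would normally be remote calls.

```python
from typing import Dict, List


class CustomerService:
    """Hypothetical customer microservice with its own data."""

    def __init__(self) -> None:
        self._customers: Dict[int, Dict[str, str]] = {
            1: {"name": "Asha"},
            2: {"name": "Ben"},
        }

    def get(self, customer_id: int) -> Dict[str, str]:
        return self._customers[customer_id]


class OrderProcessingService:
    """Hypothetical order processing microservice with its own data."""

    def __init__(self) -> None:
        self._orders = [
            {"order_id": 10, "customer_id": 1, "total": 25.0},
            {"order_id": 11, "customer_id": 2, "total": 40.0},
            {"order_id": 12, "customer_id": 1, "total": 5.5},
        ]

    def list_orders(self) -> List[dict]:
        return list(self._orders)


class CustomerOrderCompositeService:
    """Joins orders with customers at runtime, in place of a SQL join."""

    def __init__(self, orders: OrderProcessingService,
                 customers: CustomerService) -> None:
        self._orders = orders
        self._customers = customers

    def orders_with_customers(self) -> List[dict]:
        orders = self._orders.list_orders()
        # Fetch each distinct customer once to limit downstream calls.
        customer_ids = {o["customer_id"] for o in orders}
        customers = {cid: self._customers.get(cid) for cid in customer_ids}
        return [
            {
                "order_id": o["order_id"],
                "total": o["total"],
                "customer_name": customers[o["customer_id"]]["name"],
            }
            for o in orders
        ]
```

The join happens in the composite service's memory, which is why this approach fits small result sets.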

2). Client Side Mash-Ups

Implement the same runtime data composition on the client side. That is, rather than having a composite service, the consumer/client applications call the required downstream services and build the composition themselves. This is often known as a client-side mashup.

Composite services and client-side mashups are suitable when the joined data is relatively small; otherwise, the UI may run out of memory.

Challenge_E). Transactions:

Transactions are quite commonly used in the context of a database but not limited to it. With monolithic applications and centralized databases, it is quite straightforward to begin a transaction, change the data in multiple rows (which can span across multiple tables), and finally commit the transaction.
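
As a small illustration of how simple this is with one database, the sketch below uses Python's built-in `sqlite3` module. The `orders` and `inventory` tables and the `place_order` helper are assumptions made for the example; the `CHECK` constraint is there only to force a failure that triggers the rollback.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY, product_id INTEGER NOT NULL, qty INTEGER NOT NULL)"
)
conn.execute(
    "CREATE TABLE inventory ("
    " product_id INTEGER PRIMARY KEY, stock INTEGER NOT NULL CHECK (stock >= 0))"
)
conn.execute("INSERT INTO inventory VALUES (1, 5)")
conn.commit()


def place_order(product_id: int, qty: int) -> bool:
    """Insert the order and reduce stock atomically across both tables."""
    try:
        with conn:  # one transaction: commit on success, roll back on error
            conn.execute(
                "INSERT INTO orders (product_id, qty) VALUES (?, ?)",
                (product_id, qty),
            )
            conn.execute(
                "UPDATE inventory SET stock = stock - ? WHERE product_id = ?",
                (qty, product_id),
            )
        return True
    except sqlite3.IntegrityError:
        # The stock check failed, so the order insert was rolled back too.
        return False
```

If the stock update fails, the order row inserted a moment earlier disappears with it, which is exactly the guarantee that becomes hard to provide once the two tables belong to different services.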

With the database-per-microservice approach, transactional boundaries may span multiple services and databases. Implementing such transactional scenarios is therefore no longer as straightforward as it is with monolithic applications and centralized databases.

The usual algorithm for implementing distributed transactions is two-phase commit (2PC). In 2PC, a centralized process called the Transaction Manager orchestrates the steps of the transaction. This makes the Transaction Manager a single point of failure: if it fails, pending transactions may never complete.

2PC has a few more limitations:

- If a given participant fails to respond, the entire transaction is blocked.

- A commit can fail after voting: the 2PC protocol assumes that a participant that has responded with a yes can definitely commit the transaction.
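
The two phases can be illustrated with a toy coordinator. This is a deliberately simplified sketch: `Participant`, `TransactionManager`, and the `can_commit` flag are hypothetical, everything runs in one process, and there is no logging, timeout handling, or recovery, which is where the limitations above actually show up.

```python
from typing import List


class Participant:
    """Hypothetical participant, e.g. one microservice with its own database."""

    def __init__(self, name: str, can_commit: bool = True) -> None:
        self.name = name
        self.can_commit = can_commit
        self.state = "init"

    def prepare(self) -> bool:
        # Phase 1: vote yes only if the local work can be committed.
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self) -> None:
        self.state = "committed"

    def rollback(self) -> None:
        self.state = "aborted"


class TransactionManager:
    """Central coordinator; in real 2PC it is also the single point of failure."""

    def run(self, participants: List[Participant]) -> bool:
        # Phase 1 (voting): ask every participant whether it can commit.
        votes = [p.prepare() for p in participants]
        # Phase 2 (completion): commit only on a unanimous yes.
        if all(votes):
            for p in participants:
                p.commit()
            return True
        for p in participants:
            p.rollback()
        return False
```

In this sketch `commit()` always succeeds after a yes vote, which is precisely the assumption the protocol makes and which a real participant may violate.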

Thus, given the distributed and autonomous nature of microservices, using distributed transactions with two-phase commit to implement transactional business use cases is a complex, error-prone task that can hinder the scalability of the entire system.

Therefore, with a microservices architecture we need a better solution.

Summary Cheatsheet:

Hope this helps.

Arun Manglick